When it comes to online games, we all know the “report” button doesn’t do anything. Regardless of genre, publisher or budget, games launch every day with ineffective systems for reporting abusive players, and some of the largest titles in the world exist in a constant state of apology for harboring toxic environments. Franchises including League of Legends, Call of Duty, Counter-Strike, Dota 2, Overwatch, Ark and Valorant have such hostile communities that this reputation is part of their brands — suggesting these titles to new players includes a warning about the vitriol they’ll experience in chat.

It feels like the report button often sends complaints directly into a trash can, which is then set on fire quarterly by the one-person moderation department. According to legendary Quake and Doom esports pro Dennis Fong (better known as Thresh), that’s not far from the truth at many AAA studios.

“I’m not gonna name names, but some of the biggest games in the world were like, you know, honestly it does go nowhere,” Fong said. “It goes to an inbox that no one looks at. You feel that as a gamer, right? You feel despondent because you’re like, I’ve reported the same guy 15 times and nothing’s happened.”

Game developers and publishers have had decades to figure out how to combat player toxicity on their own, but they still haven’t. So, Fong did.

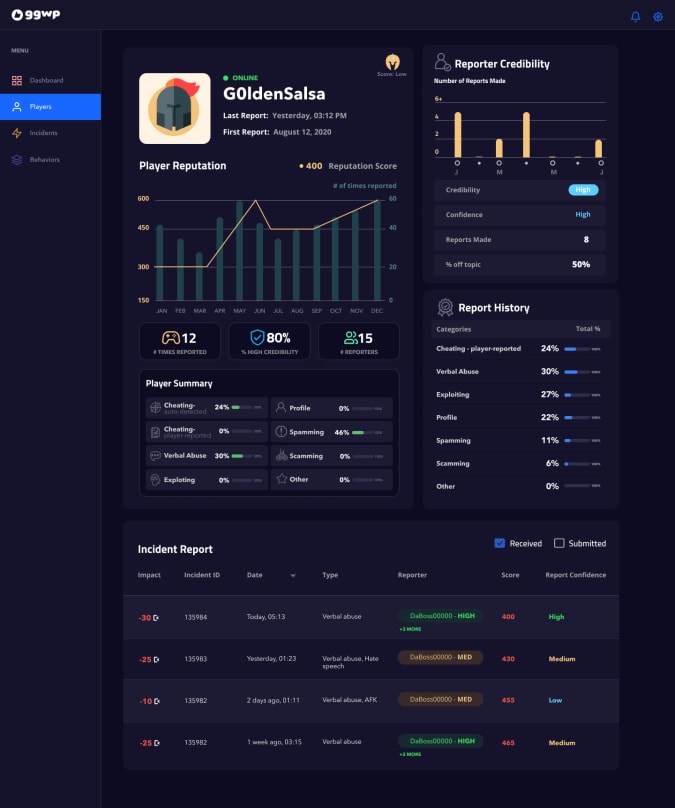

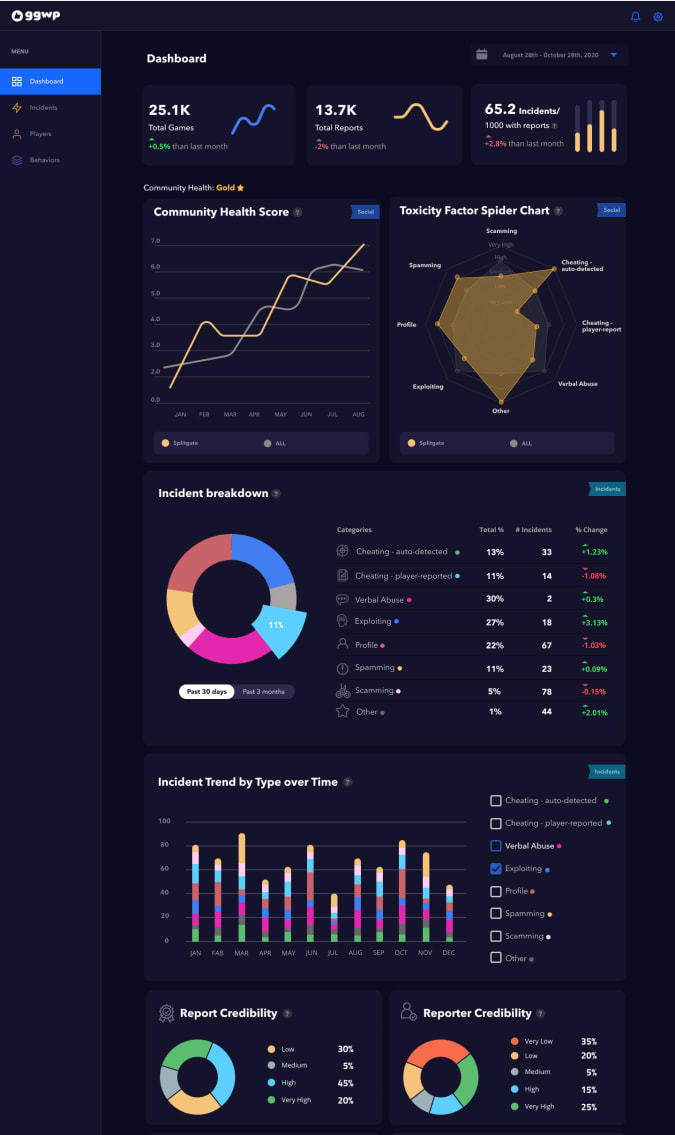

This week he announced GGWP, an AI-powered system that collects and organizes player-behavior data in any game, allowing developers to address every incoming report with a mix of automated responses and real-person reviews. Once it’s introduced to a game — “Literally it’s like a line of code,” Fong said — the GGWP API aggregates player data to generate a community health score and break down the types of toxicity common to that title. After all, every game is a big snowflake when it comes to in-chat abuse.


The system can also assign reputation scores to individual players, based on an AI-led analysis of reported matches and a complex understanding of each game’s culture. Developers can then assign responses to certain reputation scores and even specific behaviors, warning players about a dip in their ratings or just breaking out the ban hammer. The system is fully customizable, allowing a title like Call of Duty: Warzone to have different rules than, say, Roblox.
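To make the idea of per-title rules concrete, here is a minimal sketch of how a developer might map reputation scores to responses. It is not GGWP's actual API: the `Rule` class, the `RULES` table, the title names, the 0-to-100 score range and every threshold are assumptions invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    max_score: int  # rule applies when the reputation score is <= this value
    action: str     # hypothetical response name


# Hypothetical per-title rule sets; thresholds and actions are made up.
RULES = {
    "strict_title": [Rule(20, "ban"), Rule(50, "mute"), Rule(70, "warn")],
    "lenient_title": [Rule(10, "ban"), Rule(30, "warn")],
}


def action_for(title: str, score: int) -> str:
    """Return the harshest action whose threshold the score falls under."""
    # Check the lowest thresholds first so the most severe match wins.
    for rule in sorted(RULES[title], key=lambda r: r.max_score):
        if score <= rule.max_score:
            return rule.action
    return "none"


if __name__ == "__main__":
    print(action_for("strict_title", 45))
    print(action_for("lenient_title", 45))
```

The same score of 45 draws a mute under the stricter rule set and no response under the lenient one, which is the kind of difference the article describes between titles.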

“We very quickly realized that, first of all, a lot of these reports are the same,” Fong said. “And because of that, you can actually use big data and artificial intelligence in ways to help triage this stuff. The vast majority of this stuff is actually almost perfectly primed for AI to go tackle this problem. And it’s just people just haven’t got around to it yet.”

GGWP is the brainchild of Fong, Crunchyroll founder Kun Gao, and data and AI expert Dr. George Ng. It’s so far secured $12 million in seed funding, backed by Sony Innovation Fund, Riot Games, YouTube founder Steve Chen, the streamer Pokimane, and Twitch creators Emmett Shear and Kevin Lin, among other investors.


Fong and his cohorts started building GGWP more than a year ago, and given their ties to the industry, they were able to sit down with AAA studio executives and ask why moderation was such a persistent issue. The problem, they discovered, was twofold: First, these studios didn’t see toxicity as a problem they created, so they weren’t taking responsibility for it (we can call this the Zuckerberg Special). And second, there was simply too much abuse to manage.

In just one year, one major game received more than 200 million player-submitted reports, Fong said. Several other studio heads he spoke with shared figures in the nine digits as well, with players generating hundreds of millions of reports annually per title. And the problem was even larger than that.

“If you’re getting 200 million for one game of players reporting each other, the scale of the problem is so monumentally large,” Fong said. “Because as we just talked about, people have given up because it doesn’t go anywhere. They just stop reporting people.”

Executives told Fong they simply couldn’t hire enough people to keep up. What’s more, they generally weren’t interested in forming a team just to craft an automated solution — if they had AI people on staff, they wanted them building the game, not a moderation system.

In the end, most AAA studios ended up dealing with about 0.1 percent of the reports they received each year, and their moderation teams tended to be laughably small, Fong discovered.


“Some of the biggest publishers in the world, their anti-toxicity player behavior teams are less than 10 people in total,” Fong said. “Our team is 35. It’s 35 and it’s all product and engineering and data scientists. So we as a team are larger than almost every global publisher’s team, which is kind of sad. We are very much devoted and committed to trying to help solve this problem.”

Fong wants GGWP to introduce a new way of thinking about moderation in games, with a focus on implementing teachable moments, rather than straight punishment. The system is able to recognize helpful behavior like sharing weapons and reviving teammates under adverse conditions, and can apply bonuses to that player’s reputation score in response. It would also allow developers to implement real-time in-game notifications, like an alert that says, “you’ve lost 3 reputation points” when a player uses an unacceptable word. This would hopefully dissuade them from saying the word again, decreasing the number of overall reports for that game, Fong said. A studio would have to do a little extra work to implement such a notification system, but GGWP can handle it, according to Fong.
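As a rough illustration of the "teachable moment" flow, the sketch below applies a point change for an in-game event and produces a notification only when the score drops. Everything here is hypothetical: the event names, the `DELTAS` values (apart from the 3-point loss quoted above), the 0-to-100 clamp and the `apply_event` function are assumptions, not GGWP's implementation.

```python
from typing import Optional, Tuple

# Hypothetical point values per event; only the 3-point penalty echoes the article.
DELTAS = {
    "unacceptable_word": -3,
    "revived_teammate": 2,
    "shared_weapon": 1,
}


def apply_event(score: int, event: str) -> Tuple[int, Optional[str]]:
    """Return the updated score and an optional in-game notification."""
    delta = DELTAS.get(event, 0)  # unknown events leave the score unchanged
    new_score = max(0, min(100, score + delta))  # assumed 0-100 score range
    lost = score - new_score
    if lost > 0:
        unit = "point" if lost == 1 else "points"
        return new_score, f"you've lost {lost} reputation {unit}"
    return new_score, None


if __name__ == "__main__":
    print(apply_event(50, "unacceptable_word"))
    print(apply_event(50, "revived_teammate"))
```

In this sketch, bonuses are applied silently and only penalties trigger an alert; a studio could just as easily surface positive changes too.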

“We’ve completely modernized the approach to moderation,” he said. “They just have to be willing to give it a try.”

All products recommended by Engadget are selected by our editorial team, independent of our parent company. Some of our stories include affiliate links. If you buy something through one of these links, we may earn an affiliate commission.