Last December, Twitter ran a limited test in the US of a new Report Tweet flow, designed to improve the process of flagging offensive or dangerous content on the site by offering more options for explaining what the problem is. The test worked: the number of actionable reports increased by 50%, which led Twitter to remove more bad stuff. Now Twitter is applying what it learned and rolling out the new Report Tweet flow around the world.

From our tests, the new flow appears to be available only in English, and Twitter hasn't turned on any localized versions that might address issues more common in one region than in another. (We've reached out to Twitter to ask whether either of those will be addressed in the future.)

The switch comes at a key moment for the company. Content moderation has been a massive headache for social media companies, and they've faced government investigations in several countries scrutinizing their efforts.

Many moderators hired on contract do not work in safe conditions, and they have to go through many posts an hour. On top of that, the format of a submitted report might not give them the full context of the situation, forcing them to ignore the report or take the wrong action.

Twitter in particular has faced a lot of headaches over this issue. Not only is it a go-to platform for hot takes, and a honeypot for trolls and offensive commentary overall, but the company is also regularly slammed for being slow to respond to people's complaints, whether they concern reported tweets or updates to the Twitter product itself.

But Twitter is now in the middle of a would-be acquisition process that has, in part, highlighted suspect content and accounts hosted on the platform, and that could itself spur a significant change in its moderation policies. The company wants us to know that it is On It.

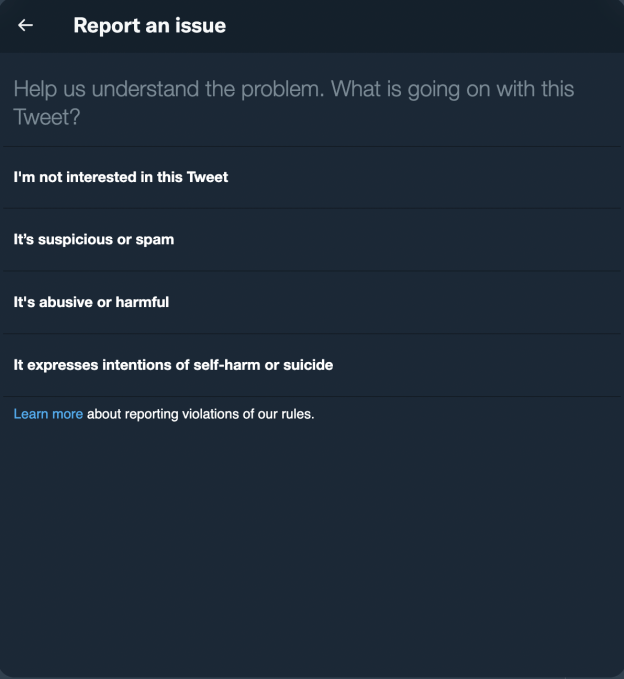

The new Report Tweet process lets you provide more context when you report a tweet. In the earlier flow, you had a limited set of categories for describing why a post should be taken down and whom it affects, and the process focused more on having you figure out which rule a tweet might be violating.

Options for reporting categories in the older flow

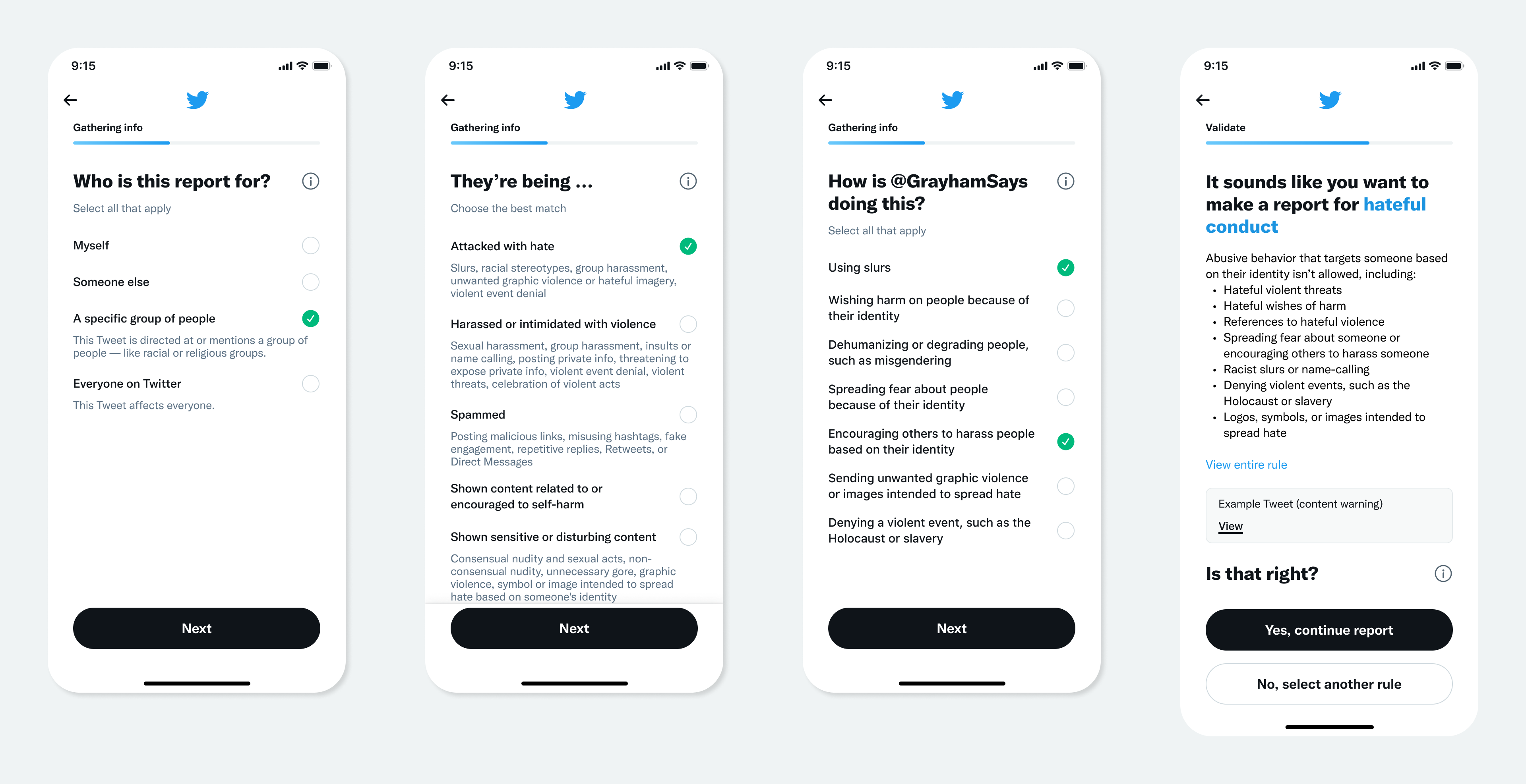

Twitter’s new reporting process guides you better by letting you give more information on how a tweet could be affecting someone. Plus, you can select multiple options to frame the context of the allegedly abusive tweet. Each screen has a detailed description of the step and the options available on that page.

New reporting flow on Twitter

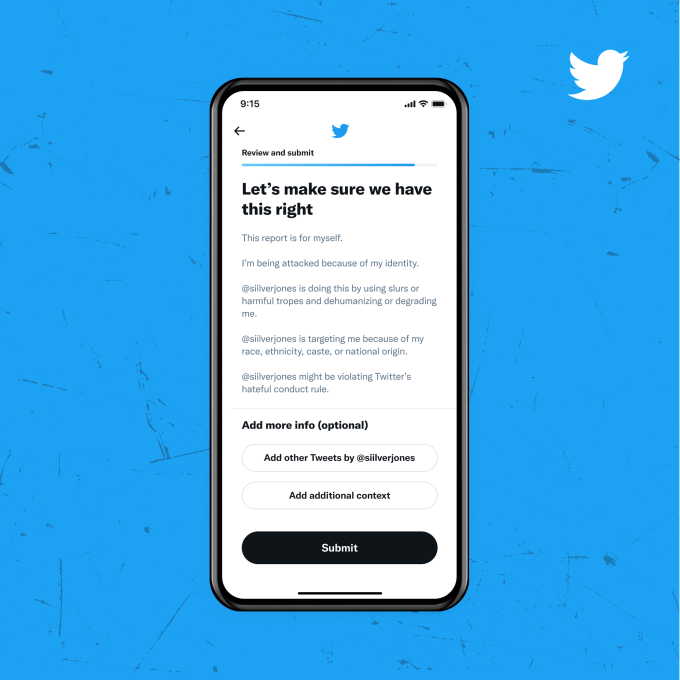

At the end of the process, Twitter summarizes your complaint to indicate which rule the reported tweet might be breaking. If you think it has picked the wrong rule, you can select a different one. Twitter also lets you provide additional context through a text box.

Twitter now lets you provide additional context for your report

The update should make reporting considerably easier for more people. For one, in the previous version, people found it frustrating to figure out which policy a tweet might be violating when they had just seen something disturbing or wanted to report harassment.

"What can be frustrating and complex about reporting is that we enforce based on terms of service violations as defined by the Twitter Rules. The vast majority of what people are reporting fall within a much larger gray spectrum that doesn't meet the specific criteria of Twitter violations, but they're still reporting what they are experiencing as deeply problematic and highly upsetting," Renna Al Yassini, a senior UX manager at Twitter, said in December.

The company said it worked with designers, researchers, and writers to thoughtfully create the new flow. Twitter also plans to use data from the new reports to identify more trends and categories of harassment and spam.

The new process will be put to the test as people in different regions and cultural contexts use it to explain why they believe a tweet is abusive.

Twitter’s new reporting process is available on iOS, Android, and the web.