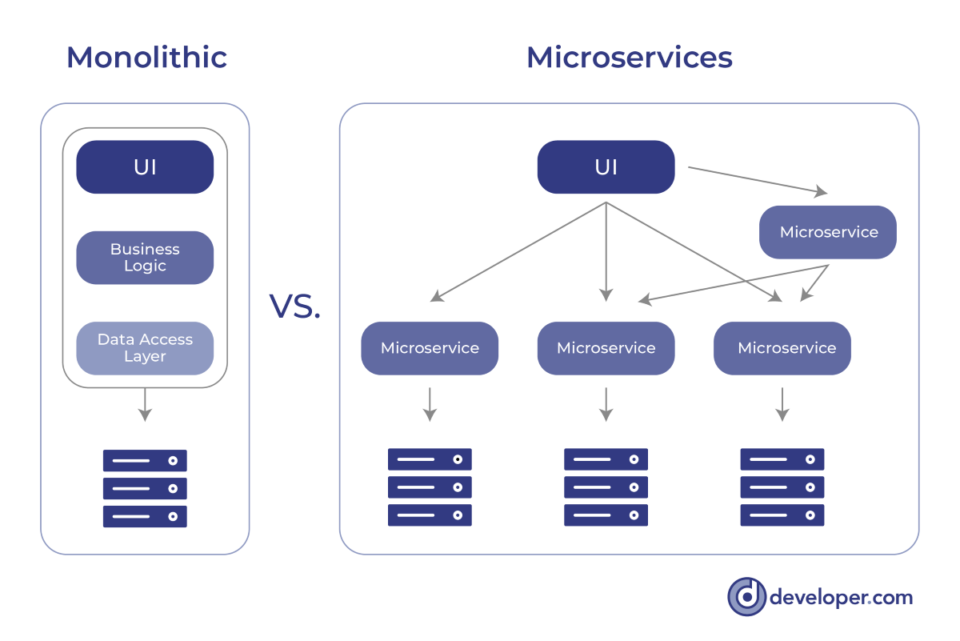

All components of a monolithic application are generally designed, deployed, and scaled as one unit, so deploying such an application is often relatively straightforward. When you implement microservices, you may have many interconnected services built in various languages and frameworks, which makes deployment more challenging.

In this programming tutorial, we discuss common deployment patterns in microservices architecture and the benefits and drawbacks of each.


What are Microservice Deployment Patterns?

There are several common patterns for deploying microservices, including the following:

- Multiple Service Instances per Host

- Service Instance per Host, with two variants: Service Instance per Virtual Machine and Service Instance per Container

- Serverless deployment

In the sections that follow, we examine each of these deployment patterns along with its benefits and drawbacks.

Multiple Service Instances Per Host

The Multiple Service Instances per Host pattern involves provisioning one or more physical or virtual hosts, each of which runs multiple service instances. This pattern has two variants. In the first, each service instance runs as its own process. In the second, multiple service instances run inside the same process or process group, for example several web applications deployed to one application server.
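To make the first variant (one process per instance) concrete, the following Python sketch shows how a simple deployment script might spread several service instances across a small pool of hosts, giving each instance on a host its own port so the processes do not collide. The instance names, host names, and the starting port of 8000 are hypothetical, and a real tool would also check each host's capacity.

```python
from collections import defaultdict


def place_instances(instances, hosts, base_port=8000):
    """Spread service instances across hosts round-robin.

    Each instance on a host gets the next free port on that host, so
    several instances can share one machine without port conflicts.
    Returns a dict mapping host -> list of (instance_name, port).
    """
    if not hosts:
        raise ValueError("at least one host is required")
    placement = defaultdict(list)
    for i, instance in enumerate(instances):
        host = hosts[i % len(hosts)]
        # Next port on this host = base port + instances already placed there.
        port = base_port + len(placement[host])
        placement[host].append((instance, port))
    return dict(placement)


if __name__ == "__main__":
    # Hypothetical instances: two copies of "orders", two of "billing", one "catalog".
    instances = ["orders-1", "orders-2", "billing-1", "billing-2", "catalog-1"]
    for host, assigned in place_instances(instances, ["host-a", "host-b"]).items():
        print(host, assigned)
```

Because every instance shares its host with others, the host's CPU and memory are used efficiently, which is exactly the trade-off the next paragraphs describe.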

The main benefits of this pattern are efficient resource usage and simple deployment. Because instances share a host (and, in the second variant, a process), overhead is low and services can start quickly.

The major drawback of this pattern is weak isolation between service instances unless each one runs as a separate process: a misbehaving instance can consume the host's memory or CPU and affect its neighbors. And when several service instances are deployed in the same process, the resource consumption of each instance becomes difficult to determine and monitor.

Service Instance Per Host

The Service Instance per Host pattern is a deployment strategy in which each host runs exactly one microservice instance at a time. The host can be either a virtual machine or a container.
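The defining rule of this pattern, one instance per host, can be expressed as a small placement check. The Python sketch below is illustrative only; `assign_one_per_host` and the instance and host names are hypothetical. It refuses to double up instances when there are not enough hosts.

```python
def assign_one_per_host(instances, hosts):
    """Map each service instance to its own dedicated host.

    Enforces the Service Instance per Host rule: no host runs more than
    one instance, so there must be at least as many hosts as instances.
    """
    if len(instances) > len(hosts):
        raise ValueError(
            f"{len(instances)} instances need {len(instances)} hosts, "
            f"but only {len(hosts)} are available"
        )
    # zip pairs each instance with a distinct host; any extra hosts stay idle.
    return dict(zip(instances, hosts))


if __name__ == "__main__":
    print(assign_one_per_host(["orders-1", "orders-2"], ["vm-1", "vm-2", "vm-3"]))
```

Unlike the previous pattern, adding an instance here always means adding a host (a VM or a container).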

There are two variants of this deployment pattern:

- Service instance per virtual machine

- Service instance per container

Service Instance Per Virtual Machine

As the name implies, this pattern packages each microservice as a virtual machine image, and each instance of the service runs as a separate virtual machine. Scaling a service is therefore straightforward: you only need to increase the number of service instances.

This deployment pattern allows each service to be scaled independently of the others. Every service has its own dedicated resources, and you can scale it up or down as your application's usage patterns change.
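Deciding how many VM instances a service needs is usually left to an autoscaler. The Python sketch below shows one simple proportional rule; the Kubernetes Horizontal Pod Autoscaler documents the same formula, `desired = ceil(current * currentMetric / targetMetric)`. Here the metric is assumed to be average CPU utilization in percent across the service's instances, and the function name and the bounds of 1 and 10 instances are hypothetical.

```python
import math


def desired_instances(current, current_load, target_load,
                      min_instances=1, max_instances=10):
    """Proportional scaling rule.

    Grows or shrinks the instance count so the average per-instance load
    (for example, CPU %) moves toward target_load, clamped to the bounds.
    """
    if current < 1 or target_load <= 0:
        raise ValueError("need at least one running instance and a positive target")
    desired = math.ceil(current * current_load / target_load)
    return max(min_instances, min(max_instances, desired))


if __name__ == "__main__":
    # Two VMs running at 90% CPU with a 60% target -> scale out to three.
    print(desired_instances(2, 90, 60))
    # Four VMs at 20% CPU with a 60% target -> scale in to two.
    print(desired_instances(4, 20, 60))
```

Because each service has its own VM image, this calculation can be run separately for every service without affecting the others.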

It is possible to deploy several services into a single VM by having each listen for requests on a different port, but at that point the VM is acting as a host for the Multiple Service Instances per Host pattern rather than this one.

One of the most significant advantages of this pattern is that each service instance runs in complete isolation. In addition, you can use cloud infrastructure features such as load balancing and autoscaling. Finally, the VM image encapsulates the service's implementation technology, so the service can be deployed and managed without knowing the technical details of how it was built.
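Cloud load balancers handle traffic distribution for you, but the basic idea is easy to sketch. The toy Python class below rotates requests across VM instances in round-robin order; the class name and the backend addresses are hypothetical, and real load balancers add health checks and smarter routing on top of this.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer that hands out backend addresses in rotation."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        # itertools.cycle repeats the backend list endlessly.
        self._cycle = itertools.cycle(list(backends))

    def next_backend(self):
        """Return the address that should receive the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical addresses of three VM instances of the same service.
    lb = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
    for _ in range(4):
        print(lb.next_backend())
```

Since every VM runs one identical instance, any of them can serve any request, which is what makes this simple rotation workable.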

The most significant disadvantage of this pattern is resource efficiency: each instance carries the overhead of an entire virtual machine, including its operating system. Building and managing VM images also takes considerable time.

Service Instance Per Container

The Service Instance per Container pattern offers many of the advantages of the virtual machine approach while being a lighter-weight, more efficient alternative. In this pattern, each microservice instance runs in its own container.

This pattern is well suited to microservices that do not require much memory or CPU power. Containers are commonly run with a runtime such as Docker, and many containers, each holding a single service instance, can share one host. This lets you use resources more efficiently and scale up and down as needed, reducing unnecessary spending on unused resources.
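The efficiency gain comes from packing many small containers onto shared hosts. The Python sketch below uses first-fit, one of the simplest bin-packing heuristics, to place containers according to their CPU and memory requests. Production schedulers such as Kubernetes use more sophisticated scoring; the `Host` class, `schedule` function, and all names and sizes here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    cpu: float        # CPU cores still available
    memory_mb: int    # memory still available, in MB
    containers: list = field(default_factory=list)


def schedule(containers, hosts):
    """First-fit placement.

    Each container is a (name, cpu, memory_mb) tuple and goes on the first
    host with enough remaining CPU and memory. Returns the names that fit nowhere.
    """
    unplaced = []
    for name, cpu, mem in containers:
        for host in hosts:
            if host.cpu >= cpu and host.memory_mb >= mem:
                host.cpu -= cpu
                host.memory_mb -= mem
                host.containers.append(name)
                break
        else:  # no host had room for this container
            unplaced.append(name)
    return unplaced


if __name__ == "__main__":
    hosts = [Host("node-1", 2.0, 2048), Host("node-2", 2.0, 2048)]
    requests = [("orders-1", 0.5, 512), ("orders-2", 0.5, 512),
                ("billing-1", 1.0, 1024), ("search-1", 1.5, 1024)]
    print("unplaced:", schedule(requests, hosts))
    for h in hosts:
        print(h.name, h.containers)
```

Each container still holds exactly one service instance; it is the host, not the container, that is shared.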

This is the simplest way to deploy microservices in containers: each container runs exactly one instance of a microservice, packaged with everything it needs, in its own isolated process.

Containers start up and scale out quickly, and they need far fewer resources than virtual machines.

The Service Instance per Container pattern simplifies scaling and deployment while keeping service instances isolated from one another. Container images can be built quickly, and containers are easy to manage.

However, there are some drawbacks associated with this approach:

- Programmers must rebuild and redeploy their containers whenever new versions become available in order to pick up bug fixes or new features. When many instances of each microservice are running across many containers, updating them all at once by hand is time-consuming and error-prone.

- Applying updates while the application is serving live traffic can hurt the user experience, for example by causing downtime or data loss.

- Although container technology is evolving rapidly, it is still not as mature as virtual machine technology. Containers are also generally less secure than virtual machines, because all containers on a host share the host's OS kernel.
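A common way to reduce the risk of updating a live application is a rolling update, which orchestrators such as Kubernetes support for Deployments: instances are replaced a few at a time, and the rollout stops if new instances fail their health checks. The Python sketch below simulates that logic on a list of version strings. `rolling_update` is hypothetical, and `is_healthy` stands in for a real health check.

```python
def rolling_update(instances, new_version, is_healthy, batch_size=1):
    """Upgrade instances in small batches so most stay in service.

    instances: list of version strings, one per running container.
    is_healthy: callable(version) -> bool, a stand-in for a real health check.
    If any instance in a batch fails its check, that batch is restored to its
    previous versions and the rollout stops.
    Returns (final_instances, succeeded).
    """
    current = list(instances)
    for start in range(0, len(current), batch_size):
        batch = range(start, min(start + batch_size, len(current)))
        previous = [current[i] for i in batch]
        for i in batch:
            current[i] = new_version
        if not all(is_healthy(current[i]) for i in batch):
            # Restore the failed batch; earlier batches already passed their checks.
            for i, old in zip(batch, previous):
                current[i] = old
            return current, False
    return current, True


if __name__ == "__main__":
    print(rolling_update(["v1", "v1", "v1"], "v2", lambda v: True))
    print(rolling_update(["v1", "v1", "v1", "v1"], "v2-bad",
                         lambda v: v != "v2-bad", batch_size=2))
```

A smaller `batch_size` keeps more capacity serving traffic during the update at the cost of a slower rollout.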

Serverless Deployment for Microservices

A popular way to deploy microservices is in a serverless environment, where you do not have to track how many servers are in use or how many resources they consume. This allows developers to focus on writing code instead of on sizing the servers the application runs on.

Serverless is an architecture in which a cloud provider takes responsibility for the server infrastructure, so developers do not have to manage it themselves. This makes it much easier to deploy microservices, because your effort goes into application functionality rather than the underlying infrastructure.
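As an illustration, on AWS Lambda a Python microservice is deployed as a handler function that the platform calls with an `event` and a `context` argument; the provider decides where and how many copies run. The sketch below assumes the function sits behind Amazon API Gateway using proxy integration, so query parameters arrive under `queryStringParameters` and the response is a dictionary with `statusCode`, `headers`, and `body`. The greeting logic is a hypothetical placeholder.

```python
import json


def lambda_handler(event, context):
    """Entry point the serverless platform invokes once per request.

    Assumes an API Gateway proxy-style event, where query parameters are in
    "queryStringParameters" (None when the request has none).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-built event; context is unused here.
    print(lambda_handler({"queryStringParameters": {"name": "orders"}}, None))
```

Note that nothing in the code mentions servers, ports, or instance counts; those concerns belong to the platform.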

Serverless architectures have several benefits, including improved scalability and flexibility, lower costs, and increased developer productivity.

Despite its name, “serverless” does not mean there are no servers; it means you do not manage them. You pay only for what you use, so if your app is idle or receives no traffic, you are not billed for compute. This model lets developers focus on building their apps rather than on how they will scale in the future or how much each server costs them every month.
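Pay-per-use billing on function platforms such as AWS Lambda typically combines a per-request charge with a compute charge measured in GB-seconds (memory allocated multiplied by execution time). The Python sketch below performs that arithmetic. The prices are hypothetical inputs chosen for illustration, not any provider's actual rates.

```python
def monthly_cost(requests, avg_duration_s, memory_gb,
                 price_per_million_requests, price_per_gb_second):
    """Pay-per-use estimate: request fee plus compute billed in GB-seconds."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * avg_duration_s * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


if __name__ == "__main__":
    # Hypothetical rates: $0.25 per million requests, $0.00001 per GB-second.
    busy = monthly_cost(2_000_000, 0.5, 0.5, 0.25, 0.00001)
    idle = monthly_cost(0, 0.5, 0.5, 0.25, 0.00001)
    print(f"busy month: ${busy:.2f}, idle month: ${idle:.2f}")
```

The idle case shows the key property of the model: with zero invocations, both terms are zero.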

Serverless computing benefits microservices deployment in several ways. It reduces costs by cutting infrastructure management overhead; it reduces risk and lets teams move faster, because they do not have to scale up their systems before launching new features; and it increases agility by letting teams focus on building products rather than on how those products will scale once they are live.

Deploying microservices also becomes easier, because there are no servers for your team to provision or manage.


Final Thoughts on Deployment Patterns in Microservices

Microservices architecture breaks an application down into smaller, more manageable parts. These parts can be deployed independently, on different servers, which makes it easier to scale the system and improve performance as needed.

Deploying microservices-based applications is challenging for many reasons, but there are several strategies to choose from. Before selecting a deployment strategy for your application, make sure you understand its business objectives and goals.
